For English-speaking audiences, watching foreign-language films has always been tricky: awkward dubbing and distracting subtitles often ruin the experience. But AI-powered dubbing tools like DeepEditor are changing the game, making it look as though actors are naturally speaking another language. Could this be the end of bad lip-syncing?

The Problem With Traditional Dubbing

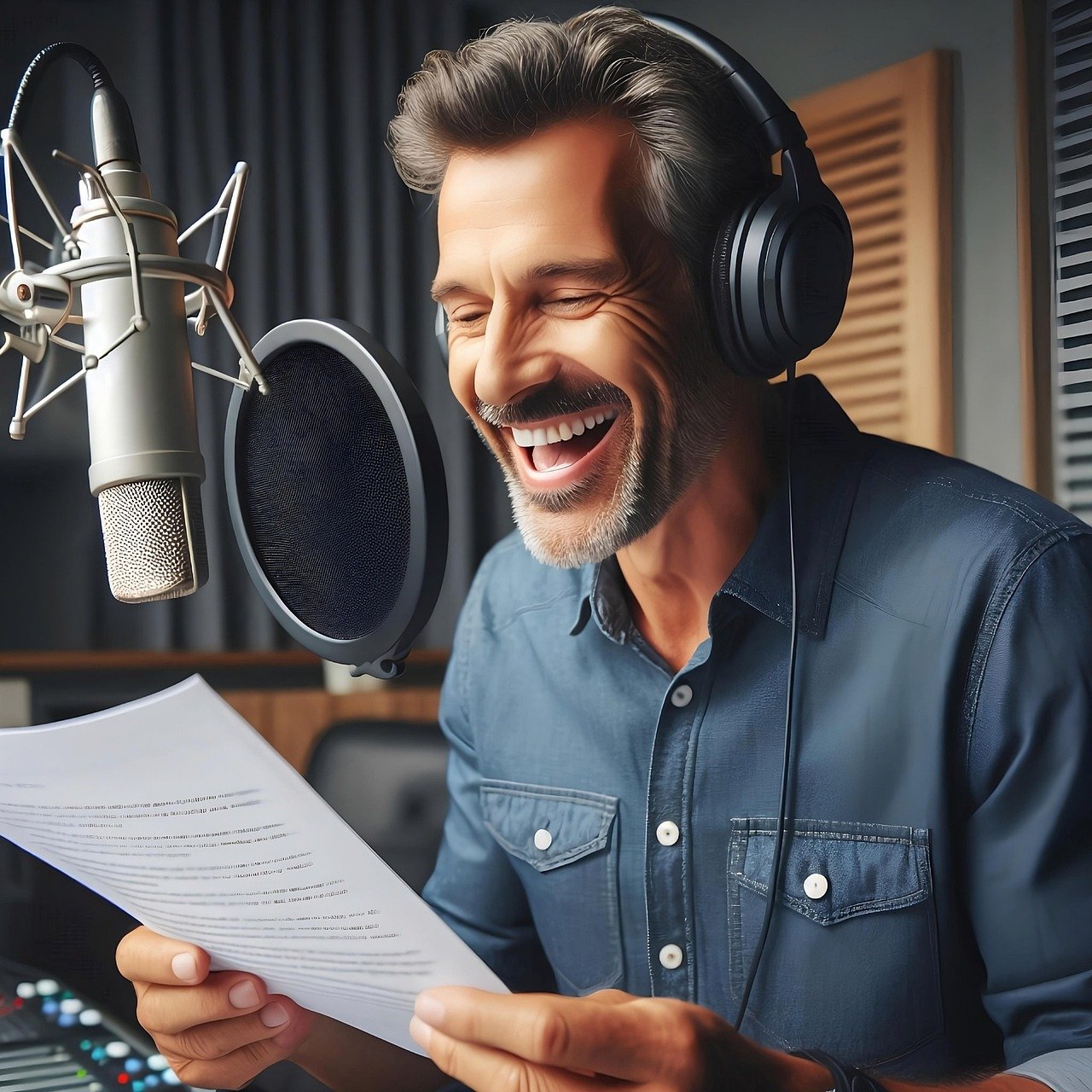

Most dubbing feels unnatural: voices don't match lip movements, and emotional performances get lost. Scott Mann, co-founder of Flawless (the company behind DeepEditor), saw this firsthand after directing films like Heist, starring Robert De Niro. "The dubbed versions just didn't carry the same impact," he says.

How AI Dubbing Works

DeepEditor uses facial recognition and 3D tracking to adjust actors' lip movements so that they appear to speak the dubbed dialogue fluently. The Swedish sci-fi film Watch the Skies became the first fully AI-dubbed feature, which allowed it to screen in 110 U.S. theaters, a release that would have been unlikely with subtitles alone.

Why This Matters

- Bigger Audiences: Foreign films often struggle in English-speaking markets. AI dubbing could help them reach more viewers.

- Lower Costs: Reshooting scenes for each language is prohibitively expensive. AI lip-syncing avoids reshoots while keeping the original performances authentic.

- Streaming Boom: As platforms like Netflix expand globally, demand for high-quality dubbing is growing fast.

The Concerns: Will AI Erase Cultural Nuances?

Not everyone is excited. Neta Alexander, a film professor at Yale, warns that making every film "sound English" could strip away cultural authenticity. Subtitles, she argues, help viewers engage with foreign languages and keep the original storytelling intact.

The Future of Dubbing

AI won't replace actors; instead, it gives filmmakers better tools. But as the technology improves, the debate continues: should films adapt to their audiences, or should audiences learn to embrace subtitles?