Elon Musk has joined a growing number of AI experts who say the industry may have reached the limit of available real-world data for training artificial intelligence models. During a livestreamed conversation with Stagwell chairman Mark Penn on X, Musk stated, “We’ve now exhausted basically the cumulative sum of human knowledge … in AI training.” He added that this point was reached last year.

This statement aligns with comments made by Ilya Sutskever, the former chief scientist at OpenAI, at the NeurIPS conference in December. Sutskever remarked that the AI industry had reached what he referred to as “peak data” and predicted that the scarcity of training data would lead to a shift in how AI models are developed in the future.

Musk, who also heads the AI company xAI, echoed this sentiment by suggesting that the future of AI training lies in synthetic data. He explained that with real-world data running out, the only viable option would be for AI systems to generate their own training data. “The only way to supplement [real-world data] is with synthetic data, where the AI creates [training data],” Musk said. He believes that with synthetic data, AI could essentially grade and refine itself through a continuous process of self-learning.
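The idea of a system that creates and then grades its own training data can be illustrated with a toy sketch. This is not Musk's or xAI's method, and no real model is involved: the "generator" below is a hypothetical stand-in that proposes arithmetic problems with answers that are sometimes wrong, and the "grader" is a hypothetical checker that keeps only the examples it can verify. All function names are assumptions made for illustration.

```python
import random

def generate_candidates(n, rng):
    """Hypothetical generator: proposes addition problems with an answer
    that is deliberately wrong some of the time."""
    candidates = []
    for _ in range(n):
        a, b = rng.randint(0, 99), rng.randint(0, 99)
        noise = rng.choice([0, 0, 0, 1, -1])  # roughly 40% of answers are off by one
        candidates.append({"prompt": f"{a} + {b} = ?", "a": a, "b": b, "answer": a + b + noise})
    return candidates

def grade(example):
    """Hypothetical grader: accepts an example only if its answer checks out."""
    return example["answer"] == example["a"] + example["b"]

def build_synthetic_dataset(n, seed=0):
    """Generate n candidates and keep only those the grader accepts."""
    rng = random.Random(seed)
    return [ex for ex in generate_candidates(n, rng) if grade(ex)]

dataset = build_synthetic_dataset(1000)
print(f"kept {len(dataset)} of 1000 generated examples")
```

In practice the grading step is the hard part: here the answers can be checked exactly, whereas most real-world text has no such built-in verifier.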

This shift toward synthetic data is already happening across the AI industry. Major tech companies like Microsoft, Meta, OpenAI, and Anthropic are all using synthetic data in the development of their AI models. Gartner has estimated that 60% of the data used for AI and analytics projects in 2024 would be synthetically generated.

Synthetic data has already been used to train large-scale AI models. For example, Microsoft’s Phi-4 model, which was open-sourced earlier this week, was trained using both real-world and synthetic data. Google’s Gemma models and Anthropic’s Claude 3.5 Sonnet also used synthetic data during their training, and Meta has fine-tuned its latest Llama models using AI-generated data.

In addition to offering a solution to the data shortage, training AI with synthetic data can also bring significant cost savings. AI startup Writer, which developed its Palmyra X 004 model almost entirely with synthetic data, reported a development cost of just $700,000. By comparison, estimates suggest that a similarly sized model developed by OpenAI would have cost around $4.6 million.
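To put those two figures side by side, the short calculation below works out the gap. It uses only the numbers quoted above; the variable names are illustrative, and the OpenAI figure is an outside estimate rather than a reported cost.

```python
# Figures quoted in the article (US dollars).
writer_cost = 700_000        # Writer's reported cost for Palmyra X 004
openai_estimate = 4_600_000  # estimated cost of a similarly sized OpenAI model

savings = openai_estimate - writer_cost
ratio = openai_estimate / writer_cost
reduction_pct = 100 * savings / openai_estimate

print(f"Difference: ${savings:,}")                    # Difference: $3,900,000
print(f"Cost ratio: about {ratio:.1f}x")              # Cost ratio: about 6.6x
print(f"Reduction: about {reduction_pct:.0f}%")       # Reduction: about 85%
```

On these figures, the synthetic-data approach cost roughly one-seventh as much, a reduction of about 85%.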

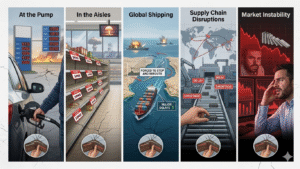

As the AI industry faces a diminishing supply of real-world data, synthetic data is set to play an increasingly crucial role in training AI models. This shift is not only necessary to ensure the continued advancement of AI but also offers the potential for substantial cost reductions in the development process.

If the limits of real-world data have indeed been reached, synthetic data is becoming the key to powering the next generation of AI models. As this trend accelerates, we can expect a fundamental shift in how AI systems are trained and how they evolve over time.